A couple of weeks ago, a friend retweeted the release announcement of Debian 10:

Debian 10 buster has been released! https://t.co/Qm1Oc7SXiF

— The Debian Project (@debian) July 7, 2019

First thought: it has taken a couple of years but here it is.

Second thought: these people know how to curate software… wait, my spider-sense is tingling like crazy.

And then I realized the connection between how the Debian project solves certain problems around software ecosystem curation and some of the least happy parts of the life of a Scala developer: the trouble with libraries, mainly binary compatibility problems.

Library galore perils

It can be argued that the single factor that makes modern development, with Scala or otherwise, more productive is the extensive use of tons and tons of open source libraries. What in other times would have required months of development is today a handful of lines of code depending on tens of libraries[1].

However, this powerful practice carries the seeds of important problems that can curtail its benefits. Most of the perils of the profuse use of libraries are given short shrift by development teams, as if they were not our responsibility. Take a look at the skeletons in our closet:

-

Licensing. There are many kinds of software licenses and more than one definition of open source out there[2]. Some licenses impose restrictions on the systems their libraries are integrated into (e.g. GPLv3), so we cannot equate “I can download it from Maven Central” with “I can use it freely”.

There is an SBT plugin to quickly check the licenses of your dependencies called sbt-license-report. Most of the licenses are detected and classified (e.g. Apache, GPL with Classpath Exception) but in some cases, the information is missing from Maven Central and you will need to do a manual check.

-

Library quality. Not all code released as a library meets the same quality standards. Even when a library meets high standards, its assumptions or focus can be far from the design of your application, forcing you into circuitous workarounds. Sometimes we want a banana, but the library we have found has a giant gorilla holding the banana. Think of this gorilla as concepts unrelated to your application plus bugs (in the library or its transitive dependencies).

Adopting libraries should not be done blithely but after a cost-benefit analysis. Remember Sturgeon’s revelation: 90% of anything, including libraries, is crap.

-

Security. It’s not just that we are inheriting bugs and design warts from the libraries we use; we are also increasing our attack surface.

A cautionary tale from last year involves NPM, the main library repository of the JavaScript ecosystem. Dominic Tarr, the maintainer of a widely used library, handed the project over to a volunteer because he wasn’t using it anymore and was maintaining it for free. Unfortunately, the volunteer was a black hat hacker who released a version of the library with code targeting Copay, a Bitcoin wallet, which indeed shipped with the malicious code.

This kind of horror story is less likely to happen in better-staffed libraries, where you need a track record to become a committer. However, in the long tail of libraries out there, don’t expect all projects to have these practices. In the end, the chain is only as strong as the security of the weakest library you depend upon.

While it’s impossible to avoid not-yet-known security problems, aka zero-day vulnerabilities, the known ones are reported by the security community to the CVE database, which can be checked automatically from SBT with the sbt-dependency-check plugin. You can even make your build fail with the `dependencyCheckFailBuildOnCVSS` setting.

-

Compatibility issues. Unlike the previous ones, this problem can’t be overlooked: when we hit a library compatibility problem, typically a binary compatibility issue such as the feared `IncompatibleClassChangeError`, our code refuses to work. You can ignore that one of your libraries has a license you are violating, but leaving a version conflict unfixed is like spurring a dead horse. Congratulations, you are in JAR Hell! Way more about this in the next section.

All these problems get amplified by the fact that when we decide to marry one library we are not marrying just that library but its whole family: potentially many transitive dependencies. You need to check the licenses of all those libs, evaluate their quality, monitor their CVEs and pray that they don’t have a version conflict with the other libraries you are using.
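As a rough sketch, the two plugins mentioned in the list above could be wired into a build as shown below. The plugin versions are assumptions (newer releases may live under different coordinates), and the CVSS threshold of 7 is an arbitrary example value, so check each plugin's README before copying anything.

```scala
// project/plugins.sbt
// Versions are assumptions; use the ones recommended by each plugin's README.
addSbtPlugin("com.typesafe.sbt" % "sbt-license-report" % "1.2.0")
addSbtPlugin("net.vonbuchholtz" % "sbt-dependency-check" % "1.3.3")

// build.sbt
// Fail the build when a dependency has a known vulnerability with a CVSS
// score of 7 or higher. The threshold is an arbitrary example value.
dependencyCheckFailBuildOnCVSS := 7
```

With this in place, `sbt dumpLicenseReport` should produce the license report and `sbt dependencyCheck` should run the CVE scan. Both cover transitive dependencies too, which is where most surprises hide.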

Compatibility problems

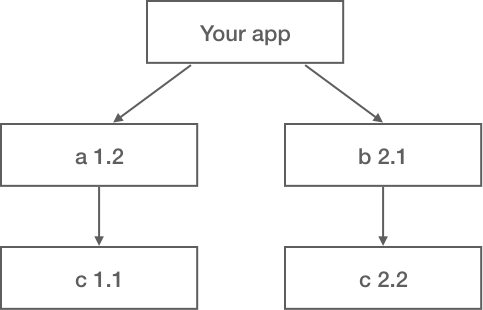

To illustrate why we have this library compatibility problem let’s use a simplified example. Imagine you have an application that has two direct dependencies, libraries a and b. Both depend on library c but, to our despair, not on the same version.

Dependencies as intended

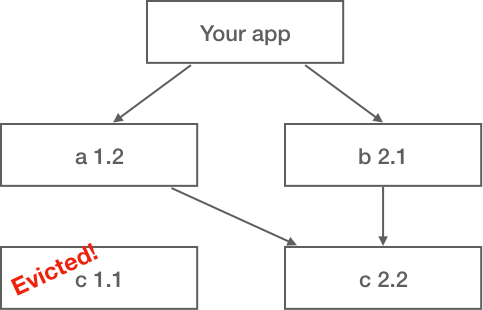

When we run our program, we cannot have two versions of the same library on the classpath (unless using OSGi), so version 1.1 of c is evicted in favor of the other one.

Dependencies in runtime
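The eviction just described can be modelled in a few lines. This is only a toy sketch that assumes a "highest version wins" policy; real resolvers have more rules, and all the names here (`Module`, `Eviction`, `resolve`) are made up for illustration.

```scala
// Toy model of "highest version wins" conflict resolution.
final case class Version(major: Int, minor: Int)

object Version {
  // Compare by major first, then by minor.
  implicit val ordering: Ordering[Version] =
    Ordering.by((v: Version) => (v.major, v.minor))
}

final case class Module(name: String, version: Version)

object Eviction {
  // What each direct dependency asks for: a wants c 1.1, b wants c 2.2.
  val requested: Map[String, Module] = Map(
    "a" -> Module("c", Version(1, 1)),
    "b" -> Module("c", Version(2, 2))
  )

  // Keep only the highest requested version of every module.
  def resolve(requests: Iterable[Module]): Map[String, Version] =
    requests.groupBy(_.name).map { case (name, ms) => name -> ms.map(_.version).max }

  // The requests that lost: these are the evicted versions.
  def evicted(requests: Iterable[Module]): Set[Module] = {
    val winners = resolve(requests)
    requests.filter(m => winners(m.name) != m.version).toSet
  }
}
```

Here `Eviction.evicted(Eviction.requested.values)` contains c 1.1, so library a will run against a version of c it was never compiled or tested with.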

When is a dependency eviction a problem? When the assumptions made by the code depending on the evicted library are not met by the one used at runtime. There are two types of problems:

-

Binary compatibility problems. Our Java/Scala code gets compiled to bytecode that invokes specific methods with a specific number of parameters of specific types. If c 2.2 adds a new argument to some method that a is invoking, a `NoSuchMethodError` will be raised. If the whole class has been renamed or removed, a `NoClassDefFoundError`. And if a static method is converted to an instance method, an `IncompatibleClassChangeError`.

-

Semantic compatibility problems. Even if the public signatures of library c don’t change, we can face compatibility problems because some assumptions are not captured by method signatures. For example, a class in c might have a constructor with default values that change between versions, completely changing what library a is asking c to do. This is trickier to diagnose than an `IncompatibleClassChangeError`.
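A minimal sketch of the semantic case, assuming a hypothetical `Retry` class in library c whose default changes between releases. The two releases are modelled as separate objects so that both fit in one file; in real life they would be the same class in two different JARs.

```scala
// Hypothetical class from library c, as shipped in version 1.1.
object CV1 {
  final case class Retry(maxAttempts: Int = 3)
}

// The same class in version 2.2: identical signature, different default.
object CV2 {
  final case class Retry(maxAttempts: Int = 0)
}

object LibraryA {
  // What the author of a wrote and tested: retry with the default settings.
  def attemptsAgainstV1: Int = CV1.Retry().maxAttempts

  // The very same source line once c 2.2 has evicted c 1.1.
  def attemptsAgainstV2: Int = CV2.Retry().maxAttempts
}
```

Nothing fails to link and no exception is thrown: library a silently stops retrying. Since Scala compiles default arguments into methods on the library side, an already compiled a would pick up the new default without even being recompiled.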

In an ideal world, we would have libraries with semantic versioning, a convention aimed at making our lives as consumers of libraries easier:

Given a version number MAJOR.MINOR.PATCH, increment the:

MAJOR version when you make incompatible API changes,

MINOR version when you add functionality in a backwards-compatible manner, and

PATCH version when you make backwards-compatible bug fixes.

If all libraries followed this versioning system, evictions would be easier to manage, as you would be sure that c 1.2 could be safely replaced by c 1.9. However, this is unlikely to ever happen: you can check binary compatibility in an automated fashion[3], but semantic compatibility is more elusive.
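Under the (optimistic) assumption that a library really follows semantic versioning, the "can this eviction be trusted?" question becomes a small function. The sketch below uses hypothetical names (`SemVer`, `canReplace`), only understands plain MAJOR.MINOR.PATCH strings, and treats 0.x versions as never interchangeable, since the convention allows anything to change there.

```scala
final case class SemVer(major: Int, minor: Int, patch: Int)

object SemVer {
  private val Pattern = """(\d+)\.(\d+)\.(\d+)""".r

  // Parse "MAJOR.MINOR.PATCH"; anything else is rejected.
  def parse(s: String): Option[SemVer] = s match {
    case Pattern(major, minor, patch) => Some(SemVer(major.toInt, minor.toInt, patch.toInt))
    case _                            => None
  }

  // Can code built against `requested` run with `selected` instead?
  def canReplace(requested: SemVer, selected: SemVer): Boolean = {
    val sameStableMajor = requested.major == selected.major && requested.major > 0
    val notOlder = Ordering[(Int, Int)].gteq(
      (selected.minor, selected.patch),
      (requested.minor, requested.patch)
    )
    requested == selected || (sameStableMajor && notOlder)
  }
}
```

For instance, `canReplace(SemVer(1, 2, 0), SemVer(1, 9, 0))` is true, while replacing 1.1.0 with 2.2.0 is not. Of course, the function is only as trustworthy as the version numbers it is given.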

Another practice that helps mitigate this problem is to reduce the number of dependencies by:

- Not using libraries unless they are worth their salt.

- Using granular libraries. Having a “commons” library that depends on many, many other things is a really bad idea, as you are bringing in all of them even if you just wanted a small piece of the functionality. It’s better to have “commons-streaming”, “commons-kafka”, etc.

- Relying on the platform. Can you use `java.time` instead of a third-party library? Is there anything solving your problem in the Scala collections library? By platform I don’t just mean the JDK and Scala’s library; this might include the libraries bundled with Spark or any other platform.
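As a small illustration of relying on the platform, date arithmetic needs nothing beyond the JDK. The object name is made up, and the two dates are simply the ones that appear in this post.

```scala
import java.time.LocalDate
import java.time.temporal.ChronoUnit

object PlatformDates {
  // Dates taken from this post: the release tweet and the update note.
  val tweeted: LocalDate = LocalDate.of(2019, 7, 7)
  val updated: LocalDate = LocalDate.parse("2019-08-17")

  // Whole days elapsed between the two dates, with no extra dependency.
  val daysBetween: Long = ChronoUnit.DAYS.between(tweeted, updated)
}
```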

Solving compatibility problems

The typical modus operandi when facing a library compatibility problem is, first, to find the libraries whose dependencies get evicted. Second, to try bumping or downgrading one of them so that their dependencies get close enough for the problem to go away. Third, if that doesn’t work, to fork one of them and produce a custom version whose dependencies are aligned with the rest of our microcosm[4].
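In SBT terms, the first two steps could look roughly like the sketch below. The organization `com.example` and every version number are hypothetical. Pinning with `dependencyOverrides` is a related knob rather than a fix: it decides which version of c ends up on the classpath, but it does not make a or b compatible with it.

```scala
// build.sbt (sketch: "com.example" and all version numbers are hypothetical)
libraryDependencies ++= Seq(
  "com.example" %% "a" % "1.0.0", // assume this release was built against c 1.1
  "com.example" %% "b" % "1.0.0"  // assume this release was built against c 2.2
)

// Pin the shared transitive dependency explicitly instead of accepting
// whatever the resolver picks.
dependencyOverrides += "com.example" %% "c" % "2.2.0"
```

Running `sbt evicted` lists the versions that lost during resolution, which is usually the quickest way to find the first suspect.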

Another solution consists of actually allowing two versions of a library at runtime. That is possible in the JVM because class loading is delegated to class loaders. OSGi is a framework that leverages this to allow multiple versions of a library, with some caveats. With OSGi, you need to declare which packages of the library are public, the rest being private and not accessible (this plays very badly with reflection-based frameworks). As long as a library doesn’t expose any type from its dependencies in its public part, that dependency can be hidden from other modules and you can have multiple versions of it.

Is OSGi a solution, then? It is in most cases, but you pay for it with more complexity in dependency management, you will have problems with anything relying on reflection and, in some cases, you will still need to fork some libraries.

Another possible mitigation, which doesn’t solve this problem but can greatly reduce its impact, is the use of microservice architectures. In such architectures, each service has a narrow business purpose that corresponds to a subset of the dependencies the equivalent monolith would have had. However, this is not automatically true: some microservice ecosystems tend to share many, many libraries and you get the worst of both worlds, with lots of dependency conflicts and coupling, as in the monolith case, plus the distributed systems complexities of any microservice ecosystem. This anti-pattern is known as the distributed monolith.

Is there any way out of the JAR Hell?

Lately, I’ve been wasting quite some time on binary compatibility problems, and I came close to being convinced that they were an inevitable part of the life of the Scala programmer, very much like NPEs for the Java programmer. Is there any solution? Should we suffer these problems as a way to atone for our promiscuous library sins?

Should we act like the JAR Hell is inevitable?

A workable approach to these problems was hidden in plain sight! When I saw the Debian 10 release announcement I was thunderstruck by the revelation: Debian has been solving these problems for decades!

If you think about it, a Debian release is a set of packages (libraries) that comply with some license requirements[5] and work well together. Those packages are curated by a bunch of maintainers who form a community that you need to be verified in person to join (so it’s unlikely that something like the NPM problem happens to them).

A release of Debian is created by getting fresh versions of the core packages (kernel, libc, build tools) and then aligning everything else. They have tooling that makes it very easy to manage patches on top of the original code (“upstream” in Debian parlance). Those patches are used to fix compatibility problems, make default behavior more Debian-like or fix other problems.

After the release is out, packages are frozen except for security patches. These are, by design, things you can trust to update automatically with confidence that nothing is going to break. Interfaces won’t change, configuration formats won’t change. You just get security patches. These are sometimes provided by the upstream developers and other times by the Debian maintainers.

What happens if I need the latest version of a library for a release of Debian that has an older version? You can always compile it against what is shipped in that release. However, as this is very common, the work of changing dependencies and fixing compilation is shared in dedicated “backport” repositories.

Cool. Can I have this for Java/Scala? We have examples of limited versions of this. For instance, the Spring Boot community does this kind of curation for Spring-based applications. They have dozens of “starter” modules that are a selection of concrete libraries in versions that work well with each other. For instance, mix in spring-boot-starter-data-couchbase and spring-boot-starter-data-cassandra and you won’t have compatibility problems. However, it is useful only with the premise of building a Spring application and limited in scope.

A promising tool that is in development for the Scala ecosystem is Jon Pretty’s Fury, a replacement for SBT. An integral concept in Fury is the layer, “a collection of related projects you are working on”, and each project can reference the upstream repository of an external library or an internal artifact. Layers can potentially be shared to form the basis for a curation similar to what the Debian project does.

Time to mature

Getting out of the JAR hell is a matter of maturity. Using so many libraries so liberally has multiplied developer productivity to a large extent but without the restraint of some curation culture like the one in Debian, its risks and practical problems might cancel out the advantages of having access to this galore of functionality.

Updated on 2019-08-17: mention microservices as a mitigation inspired by trylks’s comment

1. And its transitive dependencies! It’s not uncommon to have hundreds of them in a single small project.

2. The original definition of free software by Richard Stallman and the FSF, and the more corporate-friendly open source definition by the OSI.

3. For example, the SBT plugin MiMa allows you to check for binary compatibility against previous versions of the library you are developing.

4. If you do this, please use good conventions to avoid confusing your coworkers. For example, if you fork some library in version 1.2.2 to align versions, release it on your private artifact repo as `1.2.2-our-company` instead of `1.2.3`. The former is obviously some private customization and the latter is bound to collide with the real thing soon enough.

5. Open source. What happens with closed software? It’s not integrated by the Debian community, but it benefits greatly from this model of releases because you just need to compile your libraries/programs against each recent Debian release and provide a repository URL. No need to create a binary that is statically linked against all libraries (this is analogous to the fat-jar anti-pattern).